Below are some representative publications for each research interest. Please visit the Publications page for a full list.

Contact-rich manipulation

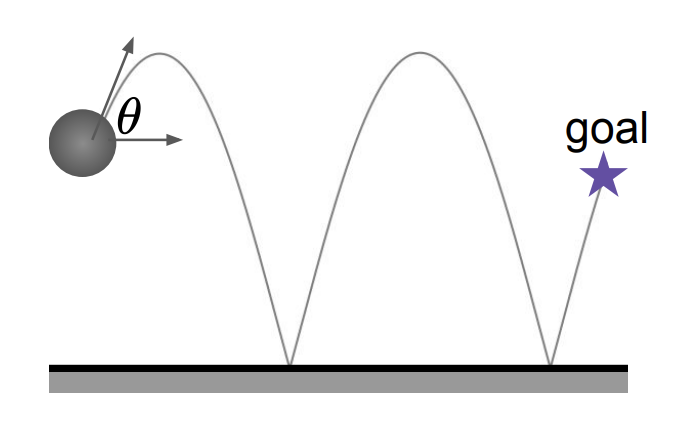

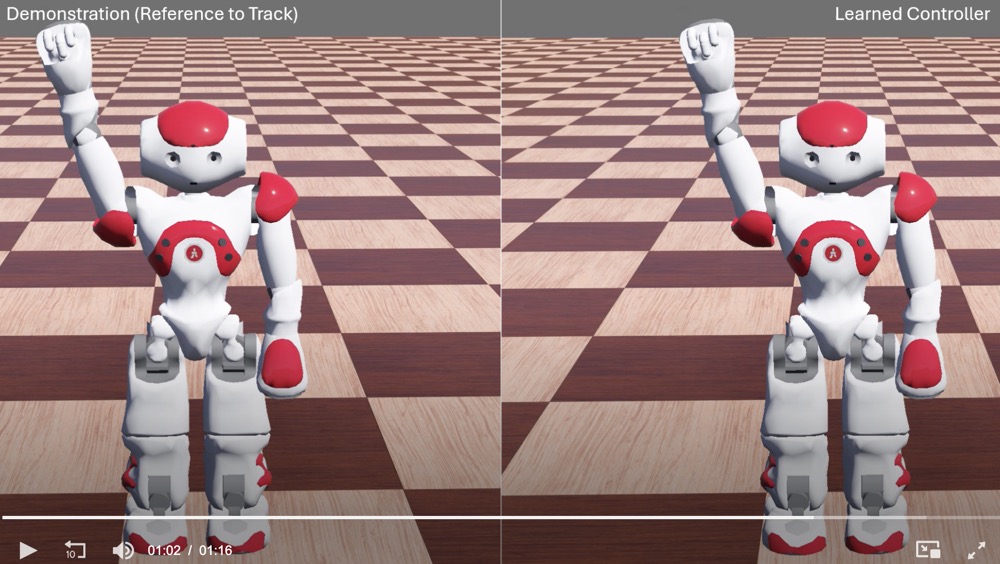

Adaptive Barrier Smoothing for First-Order Policy Gradient with Contact Dynamics

International Conference on Machine Learning (ICML), 2023

Autonomy alignment

Fundamental methods in robotics

- Optimal control, motion planning, reinforcement learning

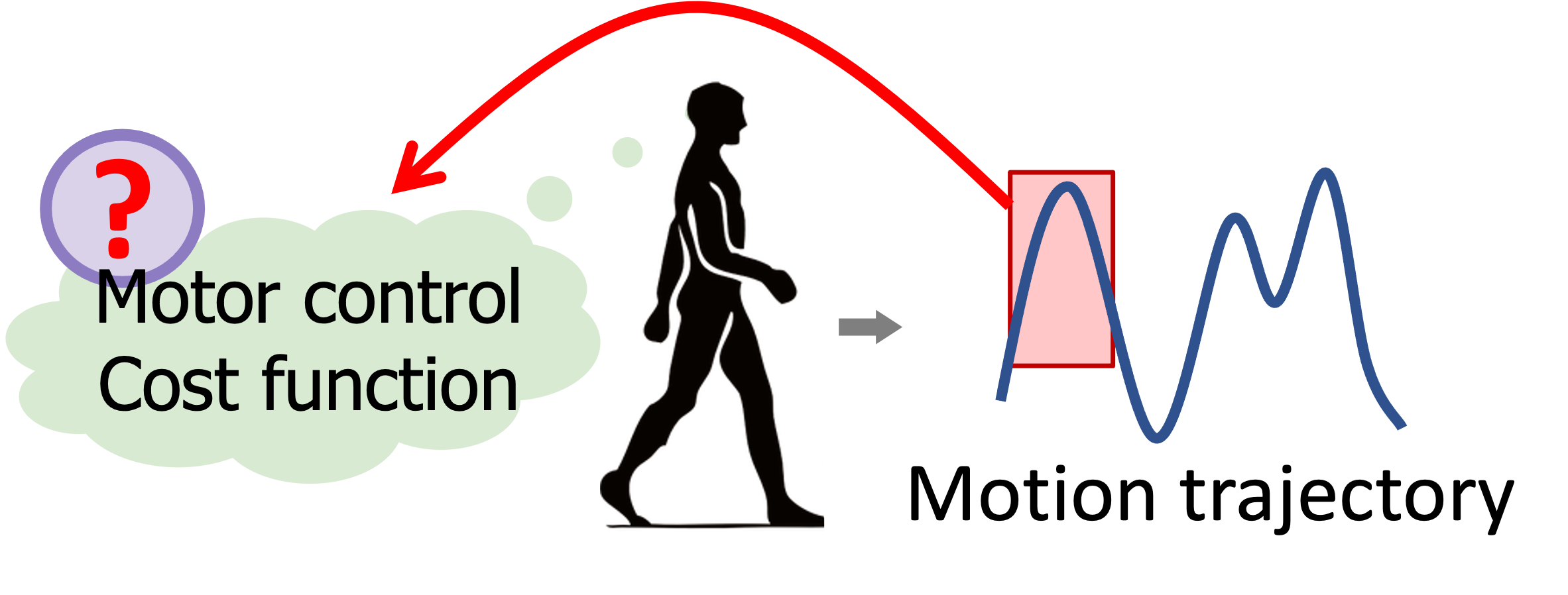

- Differentiable optimization, inverse optimization

- Hybrid system learning and control

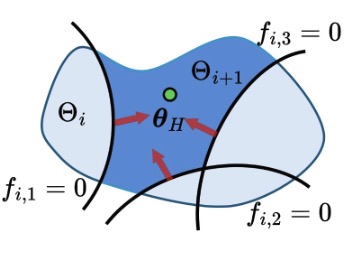

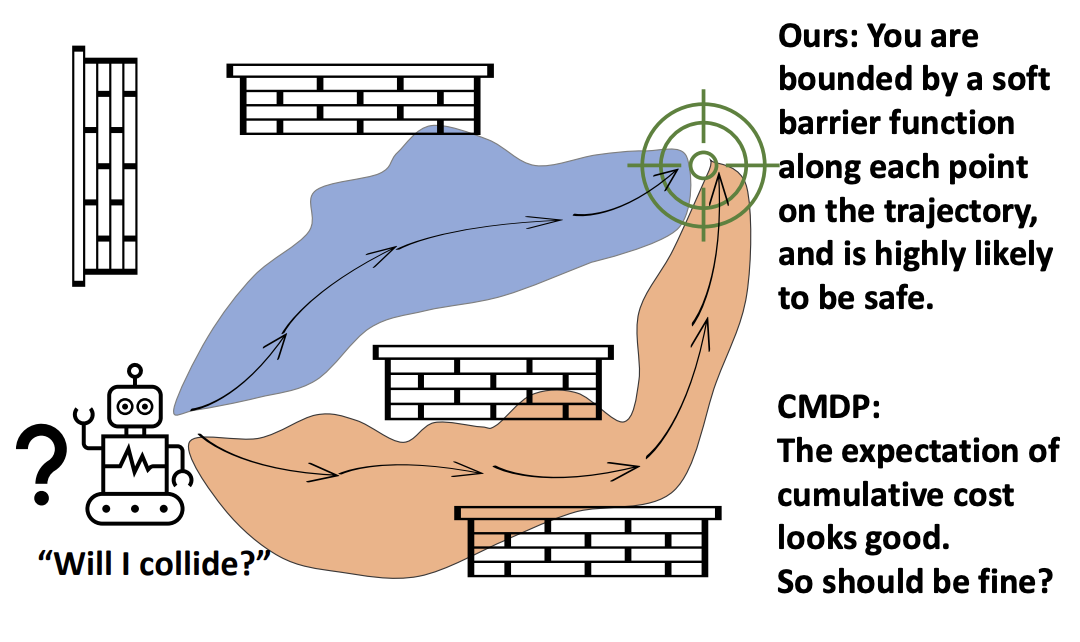

Enforcing Hard Constraints with Soft Barriers: Safe Reinforcement Learning in Unknown Stochastic Environments

International Conference on Machine Learning (ICML), 2023

A Differential Dynamic Programming Framework for Inverse Reinforcement Learning

IEEE Transactions on Robotics (T-RO), 2025